Definition of the Challenge

This Challenge solicits contributions that demonstrate efficient algorithms for point cloud compression. In addition to the proposed compression solutions, submissions of new rendering schemes, evaluation methodologies, and publicly accessible point cloud content are encouraged.

Significance and real-world value

The significant growth of 3D sensing technologies, along with the increased interest in adopting 3D representations in imaging systems, is shaping the way media content is created and used. In this environment, point clouds have become a viable solution, since they provide a practical way to capture, store, deliver and render volumetric content. Business and technology specialists predict that immersive applications using augmented and virtual reality will become mainstream by 2020. Point cloud representations are expected to be among the modalities that will be extensively exploited, as they allow users to visualize static or dynamic scenes in a natural and immersive way. However, high-quality point cloud content requires a vast amount of data, which can hinder its use in both professional and consumer applications. Thus, there is a need for an interchange and delivery format that can efficiently reduce the volume of information required with minimal or no impact on quality. Despite efforts in the scientific and standardization communities, finding an efficient compression algorithm for point clouds remains an open issue. Furthermore, the performance of state-of-the-art point cloud compression algorithms should be carefully analysed, to better understand how well they meet the needs of emerging applications.

Rules of participation

- Participants are requested to submit point cloud compression algorithms. The material needed to validate the performance of each submission should follow the procedure described in the submission guidelines of this document. In addition to the proposed coding solutions, participants can optionally submit rendering schemes, evaluation methodologies (e.g., objective quality metrics), or additional point cloud content. Detailed technical descriptions are required for each submission.

- Participants are requested to pre-register (link to pre-registration) in the Challenge. Upon successful completion of the pre-registration, the proponents will receive the data needed to validate the performance of their compression algorithm, as described in the submission guidelines. Participants are encouraged to complete this step as early as possible, and no later than 1 May 2019.

- Participants are encouraged, but not required, to submit a paper on their proposal following ICIP 2019 guidelines. Papers accepted after peer review will be included in the International Conference on Image Processing Challenges (ICIPC) 2019 proceedings, which will be published on IEEE Xplore.

Criteria of judgment

The following criteria will be applied to judge the submissions.

Point cloud compression algorithms (mandatory)

The participants are requested to deliver the material needed to validate the performance of the proposed compression solution, according to the submission guidelines and the information provided after pre-registration. A detailed description of the methodology should also be provided if a paper is not submitted. The efficiency of every proposal will be subjectively assessed, while objective quality metrics will be used as additional and indicative information. Subjective and objective evaluations will be carried out by at least two independent test laboratories and cross-checked to validate their reliability and repeatability.

Rendering schemes (optional)

Proposals for new rendering schemes are welcome along with point cloud compression algorithms to demonstrate any one or several of the following:

- Alternative rendering methodologies to visualize point cloud data.

- Rendering software that accompanies the proposed coding solutions to visualize the compressed point clouds.

Such proposals should provide the source code and accompanying executable(s). Moreover, a detailed description of the methodology should be provided, if a paper is not submitted.

Subjective evaluation methodologies and objective quality metrics (optional)

Proposals for new evaluation methodologies and objective quality metrics are welcome along with point cloud compression algorithms to demonstrate any one or several of the following:

- Alternative subjective evaluation methodologies that can assess visual quality of point clouds after compression, processing or manipulation.

- Alternative objective quality metrics that are well correlated with subjective assessment of point cloud quality over a range of manipulations including, but not limited to, compression.

- Methods that assess the quality of interaction with a compressed point cloud, such as random access, resilience to errors, progressive decoding, free-navigation, either in conventional displays or in immersive environments making use of head-mounted displays.

Such proposals should provide the source code and accompanying executable(s). Moreover, a detailed description of the methodology should be provided, if a paper is not submitted.

Additional point cloud content (optional)

Proposals for new point cloud content motivated by existing or emerging use cases and application scenarios are welcome along with point cloud compression algorithms. Such proposals should provide a detailed description of the acquisition methodology for the newly introduced content, if a paper is not submitted. Moreover, such proposals should allow the use of the submitted content for research and standardization purposes.

Submission guidelines

These guidelines detail the ICIP 2019 Challenge on Point Cloud Coding. They give an overview of the information provided to pre-registered participants and of the deliverables expected from them.

Contents

Eight (8) point clouds are selected as test contents for this Challenge; four (4) of them depict human figures, and four (4) depict static objects. The test contents form a representative set of point clouds with limited volume that are commonly visualized from outside, with rather diverse characteristics in terms of geometry and color details. The contents have been preprocessed by down-sampling, rotation, translation, and scaling to fit in a minimum bounding box with origin point (0,0,0) and normalized size 1. The resulting point clouds constitute the set of reference contents to be used in the Challenge. The dataset will be provided to participants after successful completion of their pre-registration step.
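
The translation and scaling steps of this preprocessing can be illustrated with a minimal sketch. The source does not state how "normalized size 1" is defined, so the sketch assumes uniform scaling by the longest bounding-box side; the function name `normalize_to_unit_box` is hypothetical, and down-sampling and rotation are not shown.

```python
def normalize_to_unit_box(points):
    """Shift points so the bounding-box minimum is (0, 0, 0), then scale
    uniformly so the longest bounding-box side has length 1.
    Assumption: uniform scaling by the longest side (not stated in the source)."""
    mins = [min(p[i] for p in points) for i in range(3)]
    maxs = [max(p[i] for p in points) for i in range(3)]
    extent = max(hi - lo for lo, hi in zip(mins, maxs))
    # Guard against a degenerate cloud whose points all coincide.
    scale = 1.0 / extent if extent > 0 else 1.0
    return [tuple((p[i] - mins[i]) * scale for i in range(3)) for p in points]


# Toy example with three points (illustrative values only).
cloud = [(2.0, 4.0, 6.0), (4.0, 8.0, 6.0), (3.0, 5.0, 7.0)]
normalized = normalize_to_unit_box(cloud)
```

Uniform scaling preserves the aspect ratio of the model, which is why only the longest side reaches 1 while the other sides stay shorter.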

Format

The reference point clouds and the point clouds compressed using the anchor are stored in .ply format with binary little-endian encoding. This format is selected to ensure interoperability and to avoid precision errors. Moreover, .ply is the default format employed by the software that will be used for objective quality assessment [1].
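
As an illustration of the file layout, the following sketch serializes a point cloud as a binary little-endian PLY byte string. The property list (float32 coordinates and 8-bit RGB colors) is an assumption, since the source does not specify the property types used in the dataset, and `encode_ply_binary_le` is a hypothetical helper name.

```python
import struct


def encode_ply_binary_le(points, colors):
    """Return the bytes of a binary little-endian PLY file.
    Assumption: float32 x/y/z and uint8 red/green/blue per vertex."""
    header_lines = [
        "ply",
        "format binary_little_endian 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        "end_header",
    ]
    header = ("\n".join(header_lines) + "\n").encode("ascii")
    # "<" selects little-endian byte order without padding:
    # 3 x float32 + 3 x uint8 = 15 bytes per vertex.
    body = b"".join(
        struct.pack("<3f3B", *xyz, *rgb) for xyz, rgb in zip(points, colors)
    )
    return header + body


data = encode_ply_binary_le(
    [(0.0, 0.0, 0.0), (1.0, 0.5, 0.25)],
    [(255, 0, 0), (0, 255, 0)],
)
```

The returned bytes can be written to disk with a file opened in `"wb"` mode. If the source data uses double-precision coordinates, declaring `property double` and packing with `d` instead of `f` would avoid rounding to float32.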

Bitrates

The participants are requested to compress each of the reference contents at five (5) target bitrates, namely “R1”, “R2”, “R3”, “R4”, and “Rmax”, expressed in bits per point (bpp). The bpp is computed as the total number of bits at the output of the encoder divided by the number of points of the corresponding original point cloud. The participants must ensure that their encoders produce a bitrate that deviates by no more than ±5% from the target bpp for the first four cases; failure to do so could result in disqualification of the contribution to the Challenge. Moreover, the participants are requested to provide a compressed version of each content that corresponds to the maximum quality that can be achieved by their proposed algorithm, indicated as “Rmax”. Depending on the nature of the proposed codec (i.e., lossless or lossy), the maximum quality could be achieved in either a lossless or a lossy way.
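
The bpp definition and the ±5% tolerance can be checked with a few lines of code. This is a minimal sketch: the function names are hypothetical, and the target of 1.0 bpp is a placeholder, since the actual target values are communicated after pre-registration.

```python
def bits_per_point(bitstream_bytes, num_original_points):
    """Total encoder output in bits divided by the number of points
    in the original (uncompressed) point cloud."""
    return 8 * bitstream_bytes / num_original_points


def within_tolerance(achieved_bpp, target_bpp, tolerance=0.05):
    """True if the achieved bpp deviates from the target by at most 5%."""
    return abs(achieved_bpp - target_bpp) <= tolerance * target_bpp


# Hypothetical example: a 128,000-byte bitstream for a cloud of 1,000,000 points.
achieved = bits_per_point(bitstream_bytes=128_000, num_original_points=1_000_000)
ok = within_tolerance(achieved, target_bpp=1.0)
```

Note that the divisor is the point count of the original content, not of the decoded one, so a codec that drops points does not obtain a lower reported bpp for the same bitstream size.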

Anchor codec

The anchor codec for this Challenge is the compression scheme natively supported in Point Cloud Library (PCL) [2]. A reference with the description of this codec can be found in [3].

Rendering software

The CloudCompare [4] software is the default renderer to display the contents under assessment. Each point of every content will be represented by a square primitive of a fixed size, viewed at different distances and perspectives.

Subjective quality assessment

Subjective quality assessment will be the primary criterion to evaluate the compression efficiency of contributions and to compare them to the anchor. The following approach will be adopted in the design of the subjective experiments:

- A simultaneous Double-Stimulus Impairment Scale (DSIS) with a 5-level impairment rating will be used (5: Imperceptible, 4: Perceptible but not annoying, 3: Slightly annoying, 2: Annoying, 1: Very annoying). Human subjects will visualize both the reference and the compressed contents side-by-side and will rate the impairment of the compressed content with respect to the reference.

- Human subjects will visualize the contents on a desktop setup in test laboratories that follow the ITU-R Recommendation BT.500-13 [5].

- Human subjects will assess animated video sequences of the contents under assessment, where the models are captured from different points of view.

- A Mean Opinion Score and a corresponding Confidence Interval will be computed for each content over a statistically significant number of subjects, as per procedures defined in the ITU-R Recommendation BT.500-13 [5].
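
The Mean Opinion Score and its confidence interval can be sketched as follows. The sketch assumes a 95% interval based on the normal approximation (mean ± 1.96 · s / √N, with s the sample standard deviation); observer screening and any other steps of the recommendation are not shown, and the ratings are invented for illustration.

```python
import math
import statistics


def mos_with_ci(scores, z=1.96):
    """Return (MOS, half-width of the confidence interval) for one content.
    Assumption: 95% interval via the normal approximation (z = 1.96)."""
    n = len(scores)
    mos = statistics.mean(scores)
    std = statistics.stdev(scores)  # sample standard deviation (divides by N - 1)
    half_width = z * std / math.sqrt(n)
    return mos, half_width


# Invented DSIS ratings (1 to 5) from ten subjects for one compressed content.
ratings = [5, 4, 4, 3, 5, 4, 4, 3, 4, 4]
mos, ci = mos_with_ci(ratings)
```

The interval for this content is then reported as `mos - ci` to `mos + ci`; overlapping intervals between two codecs indicate that their scores may not differ significantly.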

Objective quality metrics

Every compressed content at every target bitrate will be assessed using variations of the point-to-point metric for geometry, the point-to-plane metric for geometry [1], the point-to-point metric for color, and projection-based metrics [6]. However, objective quality metrics will be computed only as additional and indicative information to compare contributions to the anchor; they will not be used as primary criteria to compare the contributions.
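
To make the point-to-point geometry metric concrete, the sketch below computes a symmetric mean squared nearest-neighbor error between a reference and a degraded cloud. The exact variations used in the Challenge are not specified here, so the symmetric pooling by maximum is an assumption; the brute-force search is for illustration only, and practical tools use a spatial index such as a k-d tree.

```python
def nearest_sq_dist(p, cloud):
    """Squared Euclidean distance from point p to its nearest neighbor in cloud."""
    return min(sum((a - b) ** 2 for a, b in zip(p, q)) for q in cloud)


def one_way_mse(source, target):
    """Mean squared nearest-neighbor distance from every source point to target."""
    return sum(nearest_sq_dist(p, target) for p in source) / len(source)


def symmetric_point_to_point_mse(reference, degraded):
    """Assumption: the two directional errors are pooled with max()."""
    return max(one_way_mse(reference, degraded), one_way_mse(degraded, reference))


# Toy example: the degraded cloud has lost one of the three reference points.
reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
degraded = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
error = symmetric_point_to_point_mse(reference, degraded)
```

Evaluating both directions matters: in this toy example the degraded-to-reference error is zero, and only the reference-to-degraded direction reveals the missing point.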

Deliverables expected from participants

The following deliverables are expected from proponents:

- For each content and every target bitrate, proponents should report the exact bpp that their encoding solution achieves, based on a template that will be provided after pre-registration.

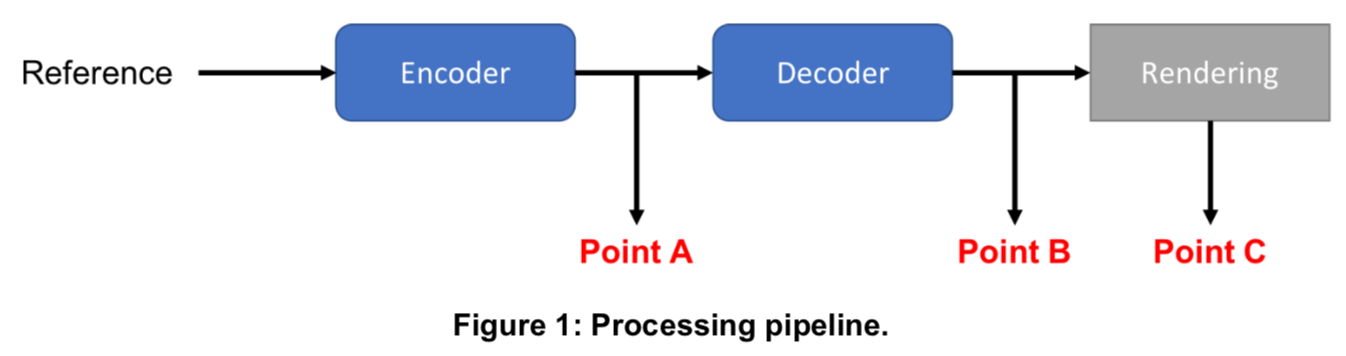

- For each content and every target bitrate, proponents should deliver the corresponding bitstream in a file, as indicated by Point A in Figure 1, so that the reported bpp produced by the proposed encoder can be double-checked.

- For each content and every target bitrate, the participants should deliver a corresponding file to assess the performance of the proposed encoding solution:

- In case the participants do not provide an optional rendering scheme, they are requested to provide a decoded point cloud (Point B in Figure 1) for each compressed content. The same format (.ply) should be used. Moreover, participants can suggest a point size per decoded point cloud for the default rendering software (Point C in Figure 1).

- In case the proponents provide an optional rendering scheme to visualize the compressed point cloud data, they are requested to provide a decoded file (Point B in Figure 1) along with their rendered data (Point C in Figure 1), following the guidelines defined in section “Criteria of judgment”. The software should allow adjusting visualization parameters such as distance and perspective for navigation around the content, to enable fair comparison with the default renderer.

One folder, named “Results”, should be delivered to the organizers via a URL pointing to cloud storage. The date and time of the contents will be used to assess eligibility of the submission. The structure and naming convention to be followed will be communicated to the participants after successful completion of the pre-registration step.

Awards

Two types of awards will be granted to winners of the Challenge: 1) Best performing point cloud compression solution, 2) Most innovative point cloud compression solution.

Important dates

1 May 2019 Deadline for pre-registration to the Challenge

15 May 2019 Submission of contributions to the Challenge for evaluation

3 June 2019 (previously 27 May) Submission of accompanying paper for possible publication at ICIP 2019 (optional)

1 July 2019 Notification of acceptance of the Challenge submissions and accompanying papers (optional)

15 July 2019 Camera ready submission of accepted papers (optional)

22 September 2019 Presentation of accepted contributions to the Challenge, results and winners awards at ICIP 2019

Organizers

Three test laboratories at EPFL, IT/UBI and UTS will run the subjective and objective evaluations and cross-check the results according to the plan described in the submission guidelines. The Challenge will be managed and coordinated by:

Touradj Ebrahimi, Professor at EPFL, Switzerland, touradj.ebrahimi@epfl.ch

Antonio Pinheiro, Professor at IT/UBI, Portugal, pinheiro@ubi.pt

Stuart Perry, Professor at UTS, Australia, stuart.perry@uts.edu.au

References

[1] D. Tian, H. Ochimizu, C. Feng, R. Cohen, and A. Vetro, “Geometric distortion metrics for point cloud compression,” in 2017 IEEE International Conference on Image Processing (ICIP), September 2017, pp. 3460–3464.

[2] R. B. Rusu and S. Cousins, “3D is here: Point Cloud Library (PCL),” in 2011 IEEE International Conference on Robotics and Automation, May 2011, pp. 1–4.

[3] J. Kammerl, N. Blodow, R. B. Rusu, S. Gedikli, M. Beetz, and E. Steinbach, “Real-time compression of point cloud streams,” in 2012 IEEE International Conference on Robotics and Automation, May 2012, pp. 778–785.

[4] “CloudCompare v2.10,” retrieved from http://www.cloudcompare.org/

[5] ITU-R BT.500-13, “Methodology for the subjective assessment of the quality of television pictures,” International Telecommunication Union, January 2012.

[6] E. M. Torlig, E. Alexiou, T. A. Fonseca, R. L. de Queiroz, and T. Ebrahimi, “A novel methodology for quality assessment of voxelized point clouds,” in Applications of Digital Image Processing XLI, vol. 10752, p. 107520I, International Society for Optics and Photonics, September 2018.